Machine Learning / AI Development

Realising software with machine learning and artificial intelligence in Zurich

We are Machine Learning / AI experts from Switzerland

PolygonSoftware's employees study the latest developments in machine learning and artificial intelligence at the University of Zurich and ETH Zurich:

- Our AI knowledge is kept up to date through the new Master's programme in Artificial Intelligence at the University of Zurich, which opened in 2020.

- We have industry experience in developing Deep Neural Networks and in the full Machine Learning process.

- We support your company from planning through to the integration of machine learning models.

- In addition to machine learning, we are also experts in data science and computer vision.

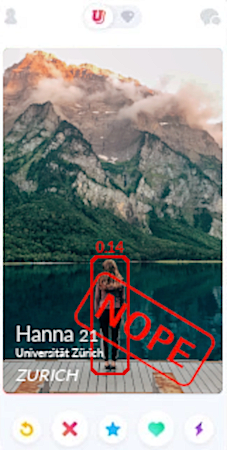

Our references in Machine Learning / AI Development.

Self-learning, intelligent algorithms are revolutionising entire industries and are a key driver behind the success of the major tech companies. PolygonSoftware develops machine learning algorithms for your company.

What is "Machine Learning" / "Artificial Intelligence"?

The term machine learning refers to algorithms that learn a task from data on their own and can derive new knowledge from it. With a traditional algorithm, the developer gives the computer precise step-by-step instructions for solving the problem at hand. A machine-learning algorithm, by contrast, is not told how to solve the underlying problem: it is itself responsible for finding the steps that solve the problem presented to it. It therefore finds not only the solution but also the way to reach that solution on its own.
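As a minimal sketch of this contrast, the first function below is a traditional algorithm with a hand-written rule. The second estimates the rule from example pairs (problem, solution) using ordinary least squares and is never shown the formula. The Celsius-to-Fahrenheit task and all function names are illustrative assumptions, not part of any PolygonSoftware project.

```python
def fahrenheit_rule(celsius: float) -> float:
    """Traditional algorithm: the developer writes the exact rule."""
    return celsius * 9 / 5 + 32


def learn_linear_rule(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Machine-learning approach: estimate slope and intercept from examples
    with ordinary least squares, without being told the formula."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Example problems (inputs) and their known solutions (outputs).
# Here the solutions are generated with the rule purely for illustration;
# in practice they would be recorded measurements.
examples_c = [-10.0, 0.0, 12.5, 25.0, 40.0]
examples_f = [fahrenheit_rule(c) for c in examples_c]

slope, intercept = learn_linear_rule(examples_c, examples_f)
print(round(slope, 3), round(intercept, 3))  # 1.8 32.0
print(round(slope * 100 + intercept, 3))     # 212.0 (an input it never saw)
```

The learned rule matches the hand-written one only because the examples lie on a straight line. The developer still had to choose that model family, which is where the expertise described further below comes in.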

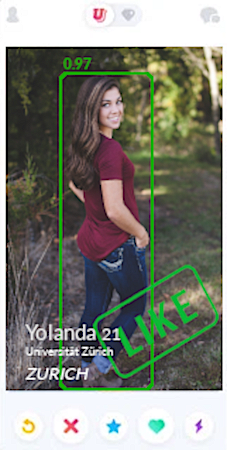

We speak of artificial intelligence when an algorithm (usually based on machine learning) can solve complex tasks that would otherwise require near-human intelligence. For example, one speaks of artificial intelligence when a machine-learning algorithm can identify objects in images faster and more reliably than a human. In some narrow tasks, such as recognising animals in pictures, artificial intelligence already outperforms us humans.

Why is "Machine Learning" / "Artificial Intelligence" so trendy?

Before the era of machine learning, problems could only be solved with computers if programmers understood the underlying problem well enough to program the computer with every step needed to solve it. Compared to humans, the computer's main advantage was that it could perform calculation steps immensely faster and without calculation errors. As computers became faster, however, the limiting factor of computer programs was increasingly not the speed at which calculation steps could be executed, but the knowledge of which steps actually lead to the goal. If engineers could not work out which steps lead to the solution, or did not even know what the solution might look like, it was impossible to develop an algorithm for the problem.

Machine learning overcomes this hurdle. With a suitably designed machine-learning algorithm, developers show a program examples of the problem and its solution, and the algorithm finds the steps needed to reach that solution itself. The algorithm can then apply the learned solution steps to similar problems whose solution is still unknown and, in many cases, find the correct result. In this sense, the algorithm learns from a situation and adapts what it has learned to solve previously unseen problems.

What types of "machine learning" algorithms are there?

Broadly, there are two main types of machine-learning algorithms: supervised and unsupervised (further categories, such as reinforcement learning, also exist). The following sections explain the two types and their differences.

Supervised Machine-Learning: Show Problem and Result, Learn the Solution Steps

If a machine-learning algorithm learns by being shown many examples of a problem together with its solution, it is called a supervised-learning algorithm. From these problem-and-solution pairs, the algorithm tries to find a way to infer the solution from the problem. Because it also sees the correct solution, the algorithm can measure its own error and adjust its solution path to achieve better results. For this kind of autonomous learning to work, the computer must be shown problem-and-solution pairs repeatedly so that the algorithm can improve step by step. A supervised machine learning project therefore needs a large and clean data set on which the algorithm can be trained. An example of a supervised machine learning algorithm is estimating a house price from house data.
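One common way for a supervised algorithm to measure its own error and adjust is gradient descent. The sketch below is a minimal illustration that rests on several assumptions: a straight-line model, mean squared error as the error measure, and the hypothetical function name `train_linear_model`. It is not the only way supervised models are trained.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error (MSE)."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []  # MSE per epoch, to watch the error shrink
    for _ in range(epochs):
        # Compare the current predictions with the known solutions.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)
        # Gradients of the MSE with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Adjust the parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


# Problem/solution pairs that happen to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b, history = train_linear_model(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, first MSE={history[0]:.1f}, last MSE={history[-1]:.2e}")
```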

The machine-learning algorithm should infer the sales price Y from the house data X. The house data includes the location, the number of rooms, the floor and much more. For example, all house advertisements from real estate platforms such as ImmoScout24 or Homegate could serve as the underlying data set. To train the algorithm, it is shown the house data X and the sales price Y of each advertisement, and it learns how to infer the price from the house data. Once trained, the algorithm has internalised the rules by which a house price is composed of the house data. It can then price a new listing independently: it applies the learned steps to the new listing's data and thereby produces a house price estimate.
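The following is a minimal sketch of this house-price example. It assumes a linear model fitted by ordinary least squares, which is only one of many possible model choices. It uses three hypothetical numeric features: number of rooms, floor, and distance to the nearest railway station in km. The listings and prices are synthetic values generated from an assumed formula so that the result can be checked. They are not real ImmoScout24 or Homegate data.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_linear_regression(features, prices):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    rows = [[1.0] + list(f) for f in features]  # leading 1.0 models the intercept
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, prices)) for i in range(k)]
    return solve(xtx, xty)


def predict(beta, feature_row):
    """Apply the learned coefficients to a new listing."""
    return beta[0] + sum(w * v for w, v in zip(beta[1:], feature_row))


# Hypothetical training listings X: (rooms, floor, km_to_station).
X = [(3.5, 2, 1.2), (4.5, 1, 0.5), (2.5, 4, 3.0), (5.5, 0, 2.2), (3.0, 3, 0.8), (4.0, 5, 4.1)]
# Synthetic sales prices Y in CHF, generated from an assumed formula for illustration.
Y = [200_000 + 150_000 * r + 10_000 * f - 30_000 * d for r, f, d in X]

beta = fit_linear_regression(X, Y)
new_listing = (3.5, 2, 1.0)
print(f"Estimated price: CHF {predict(beta, new_listing):,.0f}")
```

Real listing data is noisy, and prices are rarely linear in these features, so the coefficients would not be recovered exactly in practice. The hand-written solver would normally be replaced by a library routine such as NumPy's `numpy.linalg.lstsq` or scikit-learn's `LinearRegression`.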

Unsupervised Machine-Learning: Show the Data, Find Interesting Patterns and Correlations

The second category of machine-learning algorithms is unsupervised learning. This type of algorithm is applied to data sets in which correlations are suspected but not yet clear. The aim of the algorithm is to analyse the data and discover regularities, anomalies or patterns. You give the algorithm a clearly defined goal, but you tell it neither what the solution looks like nor how to arrive at it. A simple example is anomaly detection: you give the algorithm a data set and the goal of finding anomalies in it, without telling it which data points are anomalous. The result, i.e. what these anomalies look like and which data points are anomalous, is hidden from it, as is the solution path for finding them. Anomaly detection is used in various industries, both for quality control and in banking, where it helps detect illegal activities by identifying atypical transactions on a bank account.
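The sketch below is a deliberately simple statistical stand-in for a learned anomaly detector. It flags atypical transaction amounts using the median-based "modified z-score" and is never told which transactions are anomalous. The amounts, the function name `find_anomalies` and the commonly used cut-off of 3.5 are illustrative assumptions.

```python
from statistics import median


def find_anomalies(amounts, threshold=3.5):
    """Return indices of values whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute deviation (MAD),
    which are far less distorted by the anomalies themselves than mean and std.
    """
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return []  # over half the values are identical; this simple version reports nothing
    return [
        i for i, a in enumerate(amounts)
        if abs(0.6745 * (a - med) / mad) > threshold
    ]


# Hypothetical card transactions (CHF) on one account; no labels are provided.
transactions = [42.0, 55.5, 38.2, 61.0, 47.3, 52.8, 4999.0, 44.1, 58.6, 40.0]
print(find_anomalies(transactions))  # [6]
```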

Quality assurance offers a simple example of an unsupervised algorithm for anomaly detection. At the end of a production line for ceramic cups, each cup is photographed from all sides. An anomaly-detection algorithm is to determine for each cup whether it is damaged and thereby assess the quality of the product. To train this algorithm, it is shown thousands of photos of flawlessly produced cups. The algorithm learns to recognise the characteristics of a cup: smooth surfaces, round edges, curved shapes. Once sufficiently trained, it identifies these characteristics in every new cup that comes off the production line. However, if it detects a previously unknown feature, such as a straight, dark line in the surface caused by a crack, an anomaly is detected and an alarm can be raised. The interesting thing is that the algorithm has never seen such an anomaly before, nor has it been taught that a surface crack is an anomaly.
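The following is a minimal sketch of this idea under strong simplifying assumptions. Each photo has already been reduced to three hypothetical numeric features: surface smoothness, rim roundness, and contrast of the darkest line. The "learned characteristics" are simply the per-feature mean and standard deviation of defect-free cups. Real visual inspection systems would usually learn such features from the images themselves, for example with neural networks.

```python
from statistics import mean, stdev


def fit_normal_profile(good_samples):
    """Learn the per-feature mean and standard deviation from defect-free cups only."""
    columns = list(zip(*good_samples))
    return [(mean(col), stdev(col)) for col in columns]


def is_anomalous(profile, sample, threshold=4.0):
    """Flag a cup if any feature lies more than `threshold` standard deviations
    away from what the defect-free cups looked like."""
    return any(
        abs(value - mu) / sigma > threshold
        for value, (mu, sigma) in zip(sample, profile)
    )


# Hypothetical features per photo: (smoothness, roundness, dark_line_contrast).
good_cups = [
    (0.95, 0.98, 0.05),
    (0.96, 0.97, 0.04),
    (0.94, 0.99, 0.06),
    (0.97, 0.98, 0.05),
    (0.95, 0.96, 0.04),
    (0.96, 0.98, 0.06),
]
profile = fit_normal_profile(good_cups)

new_cup = (0.95, 0.97, 0.05)
cracked_cup = (0.93, 0.97, 0.35)  # a dark line the model has never seen
print(is_anomalous(profile, new_cup), is_anomalous(profile, cracked_cup))  # False True
```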

What are Artificial Neural Networks (ANN)?

The term neural network describes a family of machine learning models, most often trained with supervised learning, that is loosely inspired by the learning ability of the human brain. Findings from neurobiology and psychology are used to transfer principles of biological learning into computer algorithms; this research field is also called neuroinformatics. By simulating artificial neurons connected by weighted "synapses", brain-like structures can be built in the computer that can learn highly complex relationships. Neural networks typically need large amounts of data and considerable computing power to train. Their advantage is the variety of things they can learn: a neural network can learn to identify handwritten digits, but can equally learn to estimate a house price.
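The smallest building block can be sketched as a single artificial neuron (a perceptron) whose input weights play the role of the "synapses". The task of learning logical AND and the function names are illustrative assumptions. Modern networks combine many such neurons with smooth activation functions and are trained with backpropagation rather than this classic perceptron rule.

```python
def step(z):
    """Activation function: the neuron 'fires' (1) if its input is non-negative."""
    return 1 if z >= 0 else 0


def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus bias, passed through the activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Adjust the weights with the perceptron learning rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - neuron_output(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND: output 1 only if both inputs are 1.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([neuron_output(weights, bias, s) for s in samples])  # [0, 0, 0, 1]
```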

Due to their versatility, reliability and precision, neural networks have become a go-to tool for many machine learning applications. Neural networks come in many forms suited to different applications. For example, one speaks of Deep Neural Networks when a network with many layers of neurons is simulated, or of Convolutional Neural Networks when the network architecture is inspired by the brain's visual system and is designed to process images.
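The core operation of a convolutional layer can be sketched in a few lines. A small filter (kernel) slides over the image and responds strongly wherever the local pattern matches. Deep-learning libraries actually implement this as cross-correlation (the kernel is not flipped) and still call it convolution. The 5x5 image patch, the hand-picked kernel and the function name `conv2d_valid` are illustrative assumptions. In a real CNN, the kernel weights are learned during training rather than chosen by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding ('valid' mode) and return the
    response map; this is the cross-correlation used by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Grayscale patch: 1 = bright glaze, 0 = dark; column 2 is a vertical dark line,
# loosely like the crack in the ceramic-cup example.
patch = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
]
# Hand-picked "vertical dark line" detector: bright-dark-bright gives a high score,
# a uniform surface gives 0.
kernel = [
    [1, -2, 1],
    [1, -2, 1],
    [1, -2, 1],
]
for row in conv2d_valid(patch, kernel):
    print(row)  # [-3, 6, -3] for every row: the peak marks the line position
```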

If machine-learning algorithms learn themselves - why do they need developers?

Machine-learning algorithms are intelligent, but they do not possess general intelligence. There is currently no single machine-learning algorithm that can be used for every problem; rather, there are many algorithms and architectures that have proven themselves in different application areas. The machine learning developer has to master the following challenges:

1.) Data collection (data mining)

Data is the foundation of every machine learning project. Depending on the complexity of the problem and the choice of algorithm, millions of data records may be needed to give the machine learning algorithm enough material to learn from. The first challenge for a developer is therefore to gather the right data and store it correctly. This process is also known as data mining. It must ensure that the machine learning algorithm receives all the important information in a form it can use. In the house data example, it is probably not enough to provide the algorithm with the longitude and latitude of the property. The house price usually correlates less with the raw coordinates than with the municipality, or more specifically with the municipality's tax rate. The distance from a property to important places such as a school, shopping centre or railway station may also be decisive. All this information should therefore be derived from each listing's longitude/latitude and provided to the machine learning algorithm. Deriving such additional inputs from raw data is known as feature engineering.
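Deriving such location features can be sketched as follows. The sketch assumes each listing carries decimal-degree `lat`/`lon` fields and that lists of station and school coordinates are available. All field names and coordinates are hypothetical, and the great-circle (haversine) distance only approximates real travel distance. Looking up the municipality and its tax rate would work the same way but would require an external reference table.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_km(lat, lon, places):
    """Distance from (lat, lon) to the closest of the given (lat, lon) places."""
    return min(haversine_km(lat, lon, p_lat, p_lon) for p_lat, p_lon in places)


def add_location_features(listing, stations, schools):
    """Return a copy of the listing enriched with derived distance features."""
    enriched = dict(listing)
    enriched["km_to_station"] = nearest_km(listing["lat"], listing["lon"], stations)
    enriched["km_to_school"] = nearest_km(listing["lat"], listing["lon"], schools)
    return enriched


# Illustrative coordinates only.
listing = {"id": "A-17", "lat": 47.3900, "lon": 8.5100, "rooms": 3.5}
stations = [(47.3780, 8.5400), (47.4030, 8.5480)]
schools = [(47.3920, 8.5150)]
print(add_location_features(listing, stations, schools))
```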

2.) Clean data

To ensure that the machine-learning algorithm is not confused by useless, erroneous or missing data, it is essential to analyse and clean the existing data. Depending on the data set, missing information, such as the floor of the property, can be replaced by estimated values; this is known as imputation. Since real-world data is often incomplete, it is important to close these information gaps. This process is called data cleaning.
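The sketch below shows a minimal form of imputation. It assumes listings are dictionaries in which a missing floor is stored as `None` or omitted entirely. Filling with the median is only one simple strategy; others use the mean, the most frequent value, or a separate model. The extra `floor_was_missing` flag is a hypothetical design choice that lets the model know the value was estimated.

```python
from statistics import median


def impute_median(rows, field):
    """Fill missing (None or absent) values of `field` with the median of the observed ones.

    Returns the cleaned rows and the fill value, so the same value can be reused
    when new data arrives later.
    """
    observed = [row[field] for row in rows if row.get(field) is not None]
    fill_value = median(observed)
    cleaned = []
    for row in rows:
        new_row = dict(row)  # leave the original rows untouched
        missing = new_row.get(field) is None
        new_row[field] = fill_value if missing else new_row[field]
        new_row[f"{field}_was_missing"] = missing
        cleaned.append(new_row)
    return cleaned, fill_value


listings = [
    {"id": 1, "floor": 2},
    {"id": 2, "floor": None},
    {"id": 3, "floor": 5},
    {"id": 4, "floor": 1},
    {"id": 5},  # field absent entirely
    {"id": 6, "floor": 3},
    {"id": 7, "floor": 4},
]
cleaned, fill_value = impute_median(listings, "floor")
print(fill_value)                     # 3
print([r["floor"] for r in cleaned])  # [2, 3, 5, 1, 3, 3, 4]
```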

3.) Machine-learning algorithm selection

Various machine-learning algorithms can be considered for similar problems. It is crucial for the developer to select the algorithm that promises the best results with the available data and training time. This step requires a lot of knowledge about the different machine learning models and their advantages and disadvantages.
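One common way to compare candidate algorithms is to train each on the same training data and measure its error on a separate validation set it has not seen. The sketch below compares three deliberately simple, hypothetical candidates: a constant baseline, a straight line, and a nearest-neighbour lookup. It uses synthetic living-area/price data. The data, names and mean-squared-error criterion are illustrative assumptions. With little data, k-fold cross-validation gives a more reliable comparison than a single split.

```python
from statistics import mean


def fit_mean_baseline(xs, ys):
    """Always predict the average training price."""
    avg = mean(ys)
    return lambda x: avg


def fit_linear(xs, ys):
    """Straight line fitted by ordinary least squares."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: my + slope * (x - mx)


def fit_nearest_neighbour(xs, ys):
    """Predict the price of the most similar training listing."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a fitted model on the given data."""
    return mean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])


def select_model(candidates, train, valid):
    """Fit every candidate on `train`, score it on `valid`, return the best name."""
    scores = {name: mse(fit(*train), *valid) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


# Synthetic data: living area in m² vs. price in CHF (assumed, for illustration).
noise = [3000, -2000, 1000, -4000, 2000, 0, -1000, 3000]
train_x = [50, 60, 70, 80, 90, 100, 110, 120]
train_y = [5000 * x + 100_000 + e for x, e in zip(train_x, noise)]
valid_x = [57, 73, 96, 118]
valid_y = [5000 * x + 100_000 for x in valid_x]

candidates = {
    "mean baseline": fit_mean_baseline,
    "linear": fit_linear,
    "nearest neighbour": fit_nearest_neighbour,
}
best, scores = select_model(candidates, (train_x, train_y), (valid_x, valid_y))
print(best, {name: round(s) for name, s in scores.items()})
```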

4.) Set hyperparameters

One of the most difficult parts of machine learning is setting the hyperparameters correctly. A hyperparameter is a setting of the machine learning model that is chosen before training and changes how the algorithm behaves. You can think of it like an oven where you can set the temperature and ventilation. In this way, you can prepare different dishes with the same oven, e.g. bake bread or warm a casserole. Depending on how these settings are chosen, the baking process is faster or problems occur, e.g. the bread burns. Depending on the machine-learning model, there are dozens of such hyperparameters. For a neural network, for example, one has to decide how many neurons should be simulated and which activation function should be used. This step requires the machine-learning expert to have a very deep knowledge of the hyperparameters, how they affect the learning behaviour of the algorithm, and which combination is optimal for the underlying problem.
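Hyperparameters are often chosen by systematically trying combinations and keeping the one with the lowest validation error (grid search). The sketch below tunes two hyperparameters of a tiny gradient-descent model: the learning rate and the number of training epochs. The grid values and data are illustrative assumptions. A learning rate that is too high makes training diverge, the "burnt bread" of the oven analogy. Libraries such as scikit-learn provide the same idea ready-made as `GridSearchCV`.

```python
import math
from itertools import product


def train_linear_gd(xs, ys, learning_rate, epochs):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= learning_rate * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * 2 * sum(errors) / n
    return w, b


def validation_mse(params, xs, ys):
    """Mean squared error of the fitted line on held-out data."""
    w, b = params
    return sum((w * x + b - y) * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, valid, learning_rates, epoch_counts):
    """Try every hyperparameter combination; keep the lowest validation error."""
    results = {}
    for lr, epochs in product(learning_rates, epoch_counts):
        score = validation_mse(train_linear_gd(*train, lr, epochs), *valid)
        # A diverged run can overflow to inf/nan; rank it as the worst possible.
        results[(lr, epochs)] = score if math.isfinite(score) else math.inf
    best = min(results, key=results.get)
    return best, results


train = ([0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 5.0, 7.0, 9.0])  # y = 2x + 1
valid = ([5.0, 6.0], [11.0, 13.0])
best, results = grid_search(train, valid, [0.001, 0.05, 0.2], [10, 200])
print("best (learning rate, epochs):", best)
```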

5.) Train machine learning algorithm

Once the hyperparameters have been set, the machine learning algorithm is trained on the underlying data. Depending on the amount of data, the complexity of the algorithm and the available computing power, training can take from minutes to weeks. During training, the machine-learning expert monitors the model's progress. The expert decides whether its learning progress looks promising and whether the model generalises well enough, i.e. also performs well on data it was not trained on.
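Generalisation is commonly monitored by tracking the loss on held-out validation data after every epoch and stopping once it stops improving (early stopping). In the sketch below, `train_one_epoch` and `validation_loss` are hypothetical callbacks standing in for a real training framework. The loss values are invented to mimic a typical overfitting curve. `patience` (how many epochs without improvement to tolerate) is itself a hyperparameter.

```python
from typing import Callable


def train_with_early_stopping(
    train_one_epoch: Callable[[], float],
    validation_loss: Callable[[], float],
    max_epochs: int = 100,
    patience: int = 3,
):
    """Run training epochs until the validation loss has not improved for
    `patience` epochs; return the best epoch and the loss history."""
    history = []
    best_epoch, best_val = -1, float("inf")
    for epoch in range(max_epochs):
        train_loss = train_one_epoch()
        val_loss = validation_loss()
        history.append((train_loss, val_loss))
        if val_loss < best_val:
            best_epoch, best_val = epoch, val_loss  # new best: keep going
        elif epoch - best_epoch >= patience:
            break  # training loss may still fall, but validation loss has stopped improving
    return best_epoch, history


# Simulated curves: training loss keeps falling, validation loss turns up (overfitting).
train_curve = iter([1.00, 0.60, 0.40, 0.30, 0.25, 0.20, 0.17, 0.15, 0.13, 0.12])
val_curve = iter([1.10, 0.70, 0.50, 0.45, 0.47, 0.50, 0.55, 0.60, 0.66, 0.70])
best_epoch, history = train_with_early_stopping(
    lambda: next(train_curve), lambda: next(val_curve), max_epochs=10
)
print(f"stopped after {len(history)} epochs, best epoch = {best_epoch}")
```

In a real setup, the model weights from the best epoch would be saved and restored after stopping.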

6.) Evaluation and analysis of the generated model

A machine learning algorithm can be evaluated on various metrics. Depending on the use case, a requirement might be that the algorithm solves > XX% of the problems with XX% certainty at an accuracy of XX%. The metrics used to evaluate the success of the algorithm vary depending on the problem and the circumstances. If the generated model does not meet the requirements, the model and its results are analysed to determine how it can be improved.
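Which metric matters depends on the use case. For a yes/no decision such as "is this cup defective?", the usual metrics can be computed from predictions as in the minimal sketch below (the labels are hypothetical; 1 = defective). It also shows why accuracy alone can mislead: the model is 80% accurate yet misses half of the defective cups.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of alarms that were real defects
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of defects that were caught
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical inspection results for 10 cups (1 = defective).
actual = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
predicted = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
print(classification_metrics(actual, predicted))
# {'accuracy': 0.8, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```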

7.) Repetition: Improve the machine-learning algorithm.

Only rarely is the first trained model already good enough. Developing a machine-learning algorithm is an incremental process, because the exact inner workings of a machine-learning based algorithm are often a black box. If the algorithm makes wrong decisions, its internal structures can only be analysed to a limited extent to find out where the limitation comes from. It is therefore up to the machine learning expert to decide how to improve the model. Any of the previous steps can be revisited for this: new data may need to be collected, the data may need to be cleaned better, the hyperparameters may need to be set differently, or the model may need to be trained differently. It is common to repeat this cycle several times until the result meets the requirements.

8.) Integration of the model.

The result of the process described so far is a machine learning model that produces reliable results for the problem it has learned. To integrate this model into the production process, all steps used for data cleaning and data preparation must also be carried out, in exactly the same way, in the final process. Once integrated into the process, the machine-learning model can add reliable and efficient value to your value chain.
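Production data can be guaranteed the same preparation as the training data by storing the parameters learned during cleaning together with the model and reapplying them unchanged. Here, those parameters are the fill value for missing floors and the scaling statistics. The `Preprocessor` class and its JSON format are a hypothetical minimal sketch. Libraries such as scikit-learn offer `Pipeline` objects for the same purpose.

```python
import json
from statistics import mean, median, pstdev


class Preprocessor:
    """Learns cleaning/scaling parameters once (fit) and reapplies them later."""

    def __init__(self, field, fill_value=None, center=None, scale=None):
        self.field = field
        self.fill_value = fill_value
        self.center = center
        self.scale = scale

    def fit(self, rows):
        observed = [r[self.field] for r in rows if r.get(self.field) is not None]
        self.fill_value = median(observed)    # imputation value for missing entries
        self.center = mean(observed)          # standardisation parameters
        self.scale = pstdev(observed) or 1.0  # avoid division by zero
        return self

    def transform(self, row):
        value = row.get(self.field)
        if value is None:
            value = self.fill_value
        return (value - self.center) / self.scale

    def to_json(self):
        return json.dumps(vars(self))

    @classmethod
    def from_json(cls, text):
        return cls(**json.loads(text))


# Training time: fit on historical listings and save alongside the model.
training_rows = [{"floor": 2}, {"floor": None}, {"floor": 5}, {"floor": 1}, {"floor": 3}, {"floor": 4}]
saved = Preprocessor("floor").fit(training_rows).to_json()

# Production time: load the saved parameters; never re-fit on live data.
prep = Preprocessor.from_json(saved)
print(prep.transform({"floor": None}), prep.transform({"floor": 5}))
```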

Hey! Interested in Machine Learning and Artificial Intelligence? Check out my latest AI research projects! I specialize in developing cutting-edge AI systems, including:

- ConceptFormer – Integrating large-scale knowledge graphs into LLMs

- Drone Simulation – AI-powered airspace allocation optimization

- Physical Sky Rendering Engine – Physics-based sunlight and sky modeling

Looking for AI collaboration? Feel free to reach out! I work as a Senior AI Consultant and frequently speak at AI events across Switzerland, including the Swiss AI Impact Forum.